Watch the Youtube Video Here: https://youtu.be/5b3mIAJCd2g

Executive Summary: 10 Key Points for Context Window Mastery

- Context windows are your AI’s working memory – measured in tokens (roughly 0.75 tokens per word in English), they determine how much information your AI can process simultaneously across input, output, and reasoning.

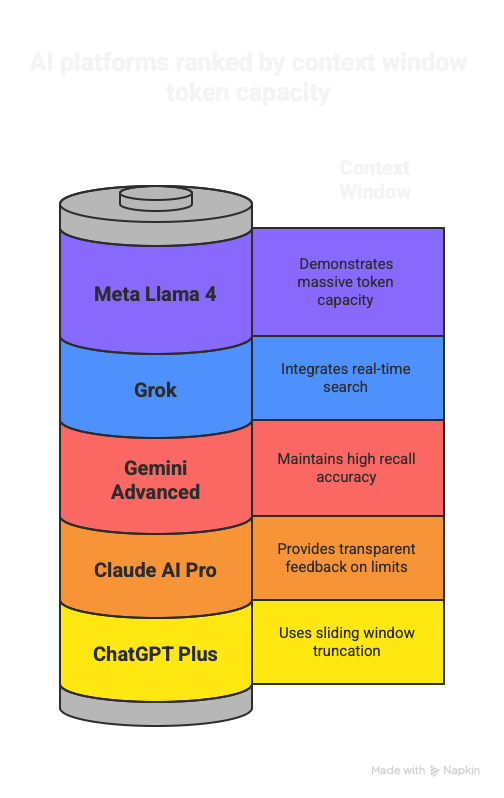

- Platform limits vary significantly – ChatGPT Plus restricts users to 32,000 tokens, Claude AI Pro offers 200,000 tokens, Gemini Advanced provides up to 1 million tokens, and Meta’s Llama 4 reaches 10 million tokens in research settings.

- Each platform handles limits differently – ChatGPT Plus uses sliding window truncation, Claude AI Pro provides transparent feedback, Gemini Advanced maintains high recall accuracy, and Grok uses real-time search integration.

- Strategic conversation management is essential – break complex tasks into segments, place crucial information at conversation boundaries, use clear structuring, and implement regular conversation hygiene practices.

- Cost-effectiveness varies considerably – Claude AI Pro ($20/month) offers superior context capacity, Gemini Advanced ($19.99/month) provides exceptional value, while ChatGPT Plus ($20/month) has more restrictive limits.

- Token optimization extends effective usage – monitor consumption, use concise language, implement clear formatting, and avoid unnecessary verbosity to maximize available context space.

- The “lost-in-the-middle” effect impacts performance – AI models perform better with important information at the beginning or end of conversations rather than in the middle of long contexts.

- Long conversations require proactive planning – develop conversation chunking strategies, prepare key information for reinjection, and recognize when to start fresh conversations.

- Cross-platform strategies increase flexibility – create portable conversation formats, develop standardized prompt templates, and implement universal context compression techniques.

- RAG integration transforms knowledge management – modern platforms use Retrieval-Augmented Generation to extend effective context beyond technical limits through intelligent document search.

Context windows represent a fundamental constraint in AI interactions. Understanding how ChatGPT Plus, Claude AI Pro, Gemini Advanced, Meta Llama 4, and Grok handle these limitations can significantly improve your AI productivity. Each platform manages context windows differently, creating distinct user experiences and optimization opportunities.

Context windows define your AI’s “working memory”—the maximum amount of text (measured in tokens) that models can process simultaneously [1]. Tokens represent text chunks: approximately 0.75 tokens per word in English, though this varies by language and complexity. When limits are reached, AI models either lose track of earlier conversation parts or terminate processing. Poor context management leads to incoherent responses, lost conversation threads, and reduced productivity.

This analysis reveals significant differences between platforms. ChatGPT Plus limits users to 32,000 tokens in standard conversations [2], while Claude AI Pro offers 200,000 tokens with transparent management [3]. Gemini Advanced provides up to 1 million tokens with strong recall accuracy [4], and Meta’s Llama 4 demonstrates 10 million token capacity in research environments [5]. Understanding these differences is crucial for platform selection and optimization strategies.

Platform Comparison: Context Window Capacities and Behaviors

The context window landscape shows substantial variation in both capacity and management approaches across major AI platforms.

ChatGPT Plus operates with 32,000 token limits in standard conversations, despite underlying models supporting larger contexts [2]. The platform employs a “sliding window” approach, automatically removing earlier context as new messages arrive. This truncation occurs without explicit user notification, often resulting in the model losing track of important information from earlier in the conversation.

Claude AI Pro provides 200,000 tokens for both Sonnet 4 and Opus 4 models [3]. Unlike ChatGPT’s silent truncation, Claude maintains complete conversation history until limits are reached, then provides clear feedback about context exhaustion. This transparent approach enables better conversation planning and reduces unexpected context loss.

Gemini Advanced offers substantial capacity with up to 1 million tokens and maintains 99.7% recall accuracy across the full context window [4]. Google’s implementation includes sophisticated attention mechanisms that preserve performance across the entire context range, making it effective for document analysis and complex reasoning tasks.

Grok operates with smaller consumer-facing limits but compensates through real-time search integration [6]. Rather than relying solely on context window capacity, Grok extends its knowledge through live web search and social media integration, though this may incur additional costs for extensive usage.

Meta’s Llama 4 demonstrates 10 million token capacity in research settings through innovative architectural improvements [5]. While not yet available in consumer applications, this represents a significant advance in context window scaling and suggests future directions for the field.

RAG Integration: Beyond Traditional Context Limits

Modern AI platforms increasingly use Retrieval-Augmented Generation (RAG) to extend effective context beyond technical limitations through intelligent document search and retrieval.

Claude Projects automatically switches to RAG when approaching context limits [7]. Files initially consume tokens when loaded, but the system transitions to search-based retrieval, expanding effective capacity up to 10x while maintaining response quality. This approach shifts from static context preservation to dynamic information retrieval.

ChatGPT’s GPTs feature implements vector-based RAG for knowledge files [8]. Files are automatically segmented into chunks (typically 1,024 tokens), then embedded using OpenAI’s text-embedding models. The system supports up to 20 files with 2 million tokens each, enabling substantial knowledge repositories that bypass traditional context limitations.

File format considerations for optimal RAG performance [9]:

- Plain text (.txt): Most efficient for semantic search with clean content structure

- Markdown (.md): Balances structure and simplicity for effective chunking

- JSON (.json): Excellent for structured data and maintaining relationships

- PDF files: Supported but may suffer from extraction and formatting issues

The shift toward RAG systems changes traditional context management strategies. Instead of maximizing token efficiency within fixed windows, users can focus on creating comprehensive, well-structured knowledge bases that support extended interactions through intelligent retrieval.

Strategic Context Management

Effective context window utilization requires understanding platform-specific behaviors and implementing appropriate management strategies.

Information placement significantly impacts performance due to attention patterns in transformer models [10]. Models typically perform better with crucial information at conversation boundaries rather than in the middle of long contexts. Place essential instructions at conversation starts and summarize key findings at natural transition points.

Content structuring improves both comprehension and efficiency [11]. Use clear headings and logical organization, implement structured formatting for complex information, and maintain consistent patterns throughout interactions. Well-organized content uses tokens more efficiently while improving model understanding.

Proactive conversation management involves planning interaction structure, preparing key information for potential reintroduction, and recognizing optimal points for conversation segmentation. This includes defining clear objectives, monitoring conversation coherence, and knowing when fresh conversations better serve specific goals.

Platform-specific optimization strategies:

- ChatGPT Plus: Implement conversation chunking, regularly reinforce key information, and start new conversations for distinct topics

- Claude AI Pro: Monitor context usage indicators, plan natural conversation breaks, and leverage transparent feedback

- Gemini Advanced: Utilize large context capacity for document analysis and maintain extended reasoning chains

- Grok: Leverage real-time search capabilities for current information while managing search costs

Economic Considerations

Understanding context window economics helps inform platform selection and usage optimization decisions.

ChatGPT Plus at $20/month provides 32,000 tokens with sliding window management [2]. The Pro tier at $200/month offers expanded capabilities, representing a significant price increase for enhanced context capacity.

Claude AI Pro’s $20/month subscription provides full 200,000-token access with transparent management [3]. The platform’s predictable behavior and advanced RAG features offer substantial value for professional applications requiring sustained context.

Gemini Advanced at $19.99/month delivers strong value with 1 million token capacity and high recall accuracy [4]. Integration with Google Workspace and included storage add significant value for business users.

Cost-per-token analysis reveals significant differences in value proposition across platforms, with context capacity, management features, and additional capabilities all contributing to overall utility.

Future Developments

The context window landscape continues evolving through technical advances and new architectural approaches.

Technical improvements in attention mechanisms promise to address current scaling limitations [12]. Research into sparse attention and linear attention algorithms could dramatically expand practical context limits while reducing computational requirements.

Enhanced management tools are emerging to help users optimize context usage automatically [13]. Visual interfaces, real-time monitoring, and intelligent segmentation tools promise to make context window management more accessible.

Hybrid approaches combining large context windows with RAG systems may provide the most effective solution, offering both sustained attention and access to vast knowledge repositories.

Conclusion

Context windows represent both opportunity and constraint in current AI systems. Success requires understanding platform-specific behaviors, implementing appropriate management strategies, and optimizing for specific use cases.

The significant differences between platforms—from ChatGPT Plus’s 32,000 tokens to research demonstrations of 10 million tokens—require tailored approaches for maximum effectiveness. Users who understand these differences can make informed platform choices and optimization decisions.

Key principles for effective context window management: understand platform behaviors and limitations, implement proactive conversation planning, optimize content for clarity and efficiency, and develop cross-platform strategies for maximum flexibility.

As AI systems evolve, context window management remains a critical skill for maximizing productivity. The techniques and platforms discussed here will continue developing, but the fundamental principles of efficient context management will remain valuable regardless of technological advances.

References

[1] IBM. What is a context window?

[2] Fdaytalk. ChatGPT Plus Context Window: 32K Tokens Limit Explained

[3] Anthropic. Context windows – Anthropic

[4] Google DeepMind. Gemini 2.5 Pro

[5] Meta AI. The Llama 4 herd: The beginning of a new era of natively multimodal AI innovation

[6] xAI. Grok 3 Beta — The Age of Reasoning Agents

[7] Anthropic Help Center. Retrieval Augmented Generation (RAG) for Projects

[8] OpenAI Help Center. Retrieval Augmented Generation (RAG) and Semantic Search for GPTs

[9] OpenAI Developer Community. GPTs – best file format for Knowledge to feed GPTs?

[10] Synthesis AI. Lost in Context: How Much Can You Fit into a Transformer

[11] Tilburg AI. Quality over Quantity: 3 Tips for Context Window Management

[12] arXiv. Advancing Transformer Architecture in Long-Context Large Language Models

[13] All About AI. How does Context Window Size Affect Prompt Performance of LLMs?

Appendix: Additional Resources

NotebookLM – Advanced Document Analysis

Google’s NotebookLM leverages a 2M token context window with support for up to 25M words through advanced summarization techniques. The platform implements Gemini 1.5 Pro embeddings with dynamic chunking and multimodal understanding across text, images, and video content. Particularly effective for research and educational applications requiring comprehensive document analysis.

File Formats – Detailed Guide

Optimal Formats:

- Plain text (.txt): Efficient processing, precise chunking, ideal for semantic search

- Markdown (.md): Clear structure, preserved hierarchy, excellent for documentation

- JSON (.json): Structured data, maintained relationships, perfect for catalogs

- CSV (.csv): Tabular data, compatible with data analysis

Best Practices:

- Structure with clear headers and logical organization

- Use consistent formats across all documents

- Divide large documents into thematic files

- Include relevant keywords in section headers

- Avoid excessive formatting that may interfere with extraction

- Convert PDFs to markdown or plain text for better results

Quick Reference Card: 10 Best Practices for Context Window Optimization

🎯 Essential Actions for Maximum Context Efficiency

- Place critical information at conversation boundaries

Put essential instructions at the start and key summaries at natural break points - Use clear headings and structured formatting

Organize content with logical sections, bullet points, and consistent patterns - Monitor token consumption actively

Track usage patterns and optimize language for conciseness without losing clarity - Implement conversation chunking strategies

Break complex tasks into segments and start fresh conversations for new topics - Optimize file formats for RAG systems

Use plain text (.txt) or markdown (.md) for best semantic search results - Leverage platform-specific features

Use Claude Projects for document analysis, GPTs for custom knowledge, Gemini for large contexts - Prepare key information for reinjection

Keep summaries of important points ready to reintroduce in new conversation segments - Choose platforms based on context needs

Match platform capabilities (32K-1M tokens) to your specific use case requirements - Structure knowledge bases strategically

Create well-organized, searchable document collections with clear naming conventions - Plan conversation objectives in advance

Define clear goals and recognize optimal points for conversation transitions

💡 Pro Tip: Combine multiple platforms for maximum flexibility—use different tools for different tasks based on their unique context window strengths.

Discover our Free AI* Assistants here: https://onedayonegpt.com/

*AI may make errors. Verify important information. Learn more

LEARN MORE ABOUT CUSTOM AI ASSISTANTS ON CLAUDE and CHATGPT HERE: Custom AI Assistants ChatGPT Claude GUIDE 2025 by OneDayOneGPT