The Enterprise AI Paradox: 8 Critical Questions That Separate Winners from Expensive Mistakes

Why 92% of companies plan AI investments but only 1% achieve maturity—and how to join the successful minority

Executive Summary: The Strategic Imperative

[1] McKinsey research reveals that while 92% of companies plan to increase AI investments, only 1% consider themselves “mature” in deployment. This chasm represents an unprecedented opportunity for strategic leaders willing to implement comprehensive AI frameworks that balance technological advancement with human wisdom, economic efficiency with sustainable growth, and innovation with responsible stewardship.

The $20 Paradox That’s Puzzling Enterprise Leaders

Picture this boardroom conversation happening across corporate America: “We can get revolutionary AI capabilities for $20 per month—the cost of a business lunch. What’s the catch?”

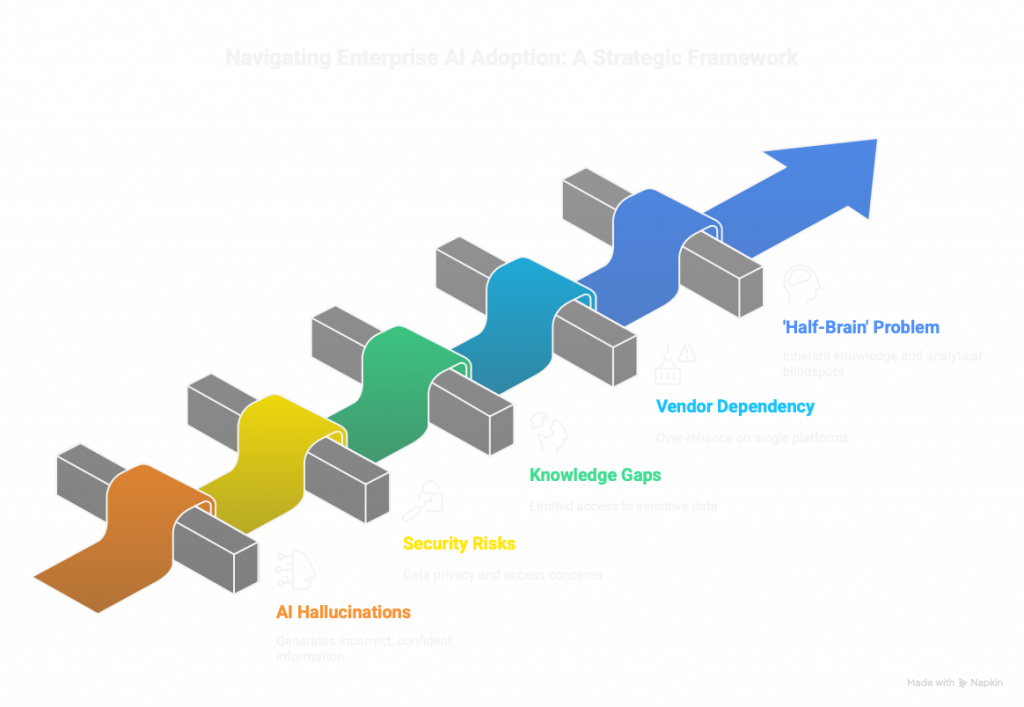

The catch, as it turns out, isn’t in the price tag. It’s in the persistent challenge of AI hallucinations that give prudent organizations legitimate pause. Current AI systems generate convincing but potentially incorrect information with such confidence that rushing into deployment without proper oversight can prove costlier than delayed adoption.

[1] McKinsey research reveals a striking paradox: while 92% of companies plan to increase AI investments, only 1% consider themselves “mature” in deployment. This isn’t a technology gap—it’s a wisdom gap. The AI finance market itself may experience dramatic growth or significant correction, making thoughtful, gradual implementation the wiser strategic path.

However, carefully overseen custom AI assistants offer a measured way to capture genuine value while mitigating risks. Contrary to breathless predictions of closing windows and competitive moats, the reality is more nuanced. This analysis addresses the eight critical questions that separate sustainable AI adoption from costly missteps, giving CEOs, functional managers, and academics a balanced framework for building transformational AI capabilities while maintaining appropriate human oversight and organizational prudence.

Question 1: What Exactly Are Custom AI Assistants and How Do They Fundamentally Differ from Generic Chatbots?

Custom AI assistants represent a paradigmatic shift from reactive chatbots to adaptive business partners, much like the evolution from industrial monoculture to permaculture systems that respond dynamically to their specific environment. [3] Built on large language models such as OpenAI’s GPT-5 (available through ChatGPT) and Anthropic’s Claude Opus 4.1 and Claude Sonnet 4, these systems combine preset instructions with organizational knowledge bases to address specific business challenges.

Unlike generic chatbots that respond with broad, publicly-trained information, custom assistants leverage your organization’s data, policies, and procedures to provide contextually relevant solutions that reflect institutional knowledge and culture.

The architectural framework encompasses three fundamental components that mirror natural ecosystem structures: sophisticated instruction sets that define behavior and scope (analogous to environmental constraints), comprehensive knowledge databases containing company-specific information (equivalent to organizational memory), and optional connectivity to web resources or action-triggering systems (representing external interface capabilities).

[4] However, treating these systems like highly capable interns requiring supervision rather than expert consultants remains crucial for successful deployment. This analogy proves particularly apt—you wouldn’t permit an intern to distribute press releases without supervisory oversight, and precisely the same principle applies to AI outputs requiring human validation.

Context Window Strategic Analysis

The context window capabilities have reached a critical inflection point:

- ChatGPT Plus: 32,000 tokens standard / [11] 196,000 tokens with GPT-5 Thinking (approximately 24,000-147,000 words) – tactical to strategic scope

- Claude Pro: [2] 200,000 tokens (roughly 150,000 words) – comprehensive strategic planning

The GPT-5 Thinking capability significantly expands ChatGPT Plus analytical capacity, making it competitive with Claude Pro for complex strategic analyses. This evolution directly impacts platform selection decisions and determines which platform suits specific enterprise applications—rather like selecting appropriate tools for different scales of environmental assessment.
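The word counts quoted above imply a rule of thumb of roughly 0.75 words per token (32,000 tokens ≈ 24,000 words). The following is a minimal sketch of a fit check built on that ratio. The function names and the 4,000-token reserve for instructions and the reply are illustrative assumptions, and real token counts depend on the tokenizer and the language of the text.

```python
# Rough context-window fit check. The 0.75 words-per-token ratio is the rule
# of thumb implied by the figures in this article, not an exact tokenizer count.
WORDS_PER_TOKEN = 0.75

# Context sizes (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "chatgpt_plus_standard": 32_000,
    "chatgpt_plus_gpt5_thinking": 196_000,
    "claude_pro": 200_000,
}


def estimate_tokens(word_count: int) -> int:
    """Estimate token usage from a word count (approximation only)."""
    return round(word_count / WORDS_PER_TOKEN)


def fits(word_count: int, window: str, reserve_tokens: int = 4_000) -> bool:
    """Return True if the document fits while leaving room for instructions and the reply.

    reserve_tokens is an assumed safety margin, not a platform-defined value.
    """
    return estimate_tokens(word_count) + reserve_tokens <= CONTEXT_WINDOWS[window]
```

A check like this is useful mainly as a planning aid: a document that sits close to the limit should be split or summarized rather than trusted to fit.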

Question 2: When Should We Deploy Custom AI Assistants for Maximum Strategic Impact?

Strategic deployment centers on identifying repetitive or knowledge-intensive tasks that consume substantial human intellectual capital—precious resources that could be redirected towards innovation, relationship building, and strategic thinking. The economic threshold proves remarkably accessible: saving merely 20 minutes monthly justifies the $20 monthly investment, creating immediate positive returns while reducing cognitive burden on human resources.
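The 20-minute threshold follows from simple arithmetic if an hour of staff time is valued at $60, which is the rate implied by the figures above. That rate is an assumption, so substitute your own loaded hourly cost. A minimal sketch, with a hypothetical function name:

```python
def break_even_minutes(monthly_fee: float, hourly_cost: float) -> float:
    """Minutes that must be saved per month for the subscription to pay for itself.

    hourly_cost is the fully loaded cost of one hour of the user's time
    (an input you must supply; $60 is only the rate implied by the article).
    """
    if hourly_cost <= 0:
        raise ValueError("hourly_cost must be positive")
    return monthly_fee * 60 / hourly_cost


# At $60/hour, a $20 subscription breaks even at 20 minutes saved per month.
minutes_needed = break_even_minutes(monthly_fee=20.0, hourly_cost=60.0)
```

At a lower hourly cost the break-even point rises proportionally, for example to 40 minutes at $30 per hour.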

[1] McKinsey’s research reveals that despite widespread AI optimism, only 19% of executives report revenue increases exceeding 5% from AI initiatives, whereas 39% see moderate increases. This performance gap highlights the critical importance of strategic implementation over mere technological adoption.

[5] Enterprise functions often cited as promising include IT automation for technical troubleshooting, customer service for routine inquiry resolution, finance for error reduction and faster decision-making, and human resources for administrative efficiency. These same functions can also deliver misleading early wins: hallucination behavior varies across models and updates, so results observed in an early pilot may not hold later.

Prioritize more controllable, human-in-the-loop (HITL) tasks: draft-then-review responses, checklist-driven triage, retrieval-augmented lookups with source citations, and exception-only automation—so humans approve outputs before action. Adopt a simple policy: “prepare → human approve → execute,” and maintain audit logs (acceptance rate, correction rate, override reasons) for continuous quality tracking.
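The audit metrics named above can be computed from a simple review log. The following is a minimal sketch under stated assumptions: the `ReviewRecord` schema and the three decision labels are hypothetical, chosen only to illustrate how acceptance rate, correction rate, and override reasons might be tracked.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReviewRecord:
    """One human review of an AI-prepared draft (hypothetical schema)."""
    draft_id: str
    decision: str  # "accepted", "corrected", or "overridden"
    override_reason: Optional[str] = None


def summarize(records: list) -> dict:
    """Compute acceptance rate, correction rate, and a tally of override reasons."""
    total = len(records)
    if total == 0:
        return {"acceptance_rate": 0.0, "correction_rate": 0.0, "override_reasons": {}}
    decisions = Counter(r.decision for r in records)
    reasons = Counter(
        r.override_reason
        for r in records
        if r.decision == "overridden" and r.override_reason
    )
    return {
        "acceptance_rate": decisions["accepted"] / total,
        "correction_rate": decisions["corrected"] / total,
        "override_reasons": dict(reasons),
    }
```

Tracking override reasons as free text is a deliberate simplification; a fixed list of reason codes would make trends easier to compare across review cycles.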

The decision criteria framework evaluates impact versus complexity through matrix analysis, prioritizing high-impact, moderate-complexity initiatives for initial deployment. Optimal scenarios include customer behavior analysis in finance, policy interpretation in HR, technical documentation in operations, and market intelligence gathering in strategy departments. These applications leverage AI’s capacity for instant information retrieval while preserving human expertise for relationship management, strategic initiatives, and ethical decision-making that require emotional intelligence and moral reasoning.
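The impact-versus-complexity matrix can be reduced to a short filter-and-sort step. This is a minimal sketch, assuming a 1 to 5 scoring scale and cutoffs (impact of at least 4, complexity of at most 3) that are illustrative choices rather than values taken from the framework itself.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int      # 1 (low) to 5 (high), scored by the business owner
    complexity: int  # 1 (low) to 5 (high), scored by the delivery team


def prioritize(cases: list, min_impact: int = 4, max_complexity: int = 3) -> list:
    """Keep high-impact, at-most-moderate-complexity cases, best candidates first."""
    shortlisted = [
        c for c in cases
        if c.impact >= min_impact and c.complexity <= max_complexity
    ]
    # Highest impact first; among equals, the simpler case wins.
    return sorted(shortlisted, key=lambda c: (-c.impact, c.complexity))
```

The scores themselves remain a human judgment; the code only makes the selection rule explicit and repeatable.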

Question 3: How Do ChatGPT Plus and Claude Pro Compare for Building Sustainable Enterprise AI Ecosystems?

Platform selection determines deployment success through fundamental architectural differences that mirror the distinction between different technological ecosystems and their carrying capacity. [2] ChatGPT Plus excels at creating standardized, shareable assistants with robust version management capabilities, while Claude Pro provides superior customization for complex internal workflows requiring extensive contextual understanding.

The context window capabilities now present strategic parity: [11] ChatGPT’s GPT-5 Thinking mode provides 196,000 tokens (though availability for custom GPTs may vary), whereas Claude’s 200,000-token capacity enables comprehensive analysis of entire business ecosystems without fragmentation.

| Strategic Capability | ChatGPT Plus | Claude Pro |
|---|---|---|
| Context Window | 32,000 tokens standard / 196,000 tokens with GPT-5 Thinking (strategic scope capability) | 200,000 tokens standard capacity (comprehensive strategic scope) |
| Process Complexity | 4-5 steps recommended standard / Enhanced with GPT-5 Thinking | Up to 10 steps optimal / Consistent complex workflow handling |
| Reasoning Capabilities | GPT-5/o3 model advanced reasoning / Multi-step problem solving | Extended thinking available / Constitutional AI safety focus |
| Sharing Ecosystem | [7] Public GPT marketplace / Version management and duplication | Enterprise-only sharing / Team and organization focus |
| Visual Content Generation | ChatGPT gpt-image-1 integration | Code-based visualization / Interactive artifacts system |

Process design recommendations reflect both technical constraints and optimization principles derived from systems thinking. With GPT-5 Thinking’s expanded 196,000-token capacity, ChatGPT implementations can now accommodate complex workflows, while Claude handles processes of up to 10 steps without losing analytical coherence. This evolution fundamentally changes deployment strategy: both platforms are now suitable for comprehensive strategic analyses that require a holistic understanding of complex business scenarios, and the two sit at near parity on context window capacity.

Question 4: What Critical Security and Governance Frameworks Must We Implement?

Data security represents the highest-stakes compliance challenge in enterprise AI deployment, requiring frameworks as robust and comprehensive as those protecting sensitive ecological systems from contamination and exploitation. Enterprise-grade implementations must address multiple regulatory jurisdictions simultaneously, with ChatGPT requiring dual-layer security configuration: users must deselect “improve the model for everyone” in both personal settings and individual GPT configurations. The uncertainty regarding setting precedence creates additional vulnerability, as platforms provide limited transparency regarding which configuration takes priority when conflicts arise.

Contemporary security concerns extend beyond traditional data protection to encompass broader questions of institutional autonomy and national security considerations. As observed in practical implementation, the reality remains that comprehensive data privacy in cloud-based AI systems presents inherent challenges, with potential governmental access pathways that organizations cannot definitively exclude from risk assessments. This reality necessitates implementing AI systems with appropriate classification levels and avoiding processing of genuinely sensitive strategic information through public cloud platforms.

[2] Enterprise governance frameworks mandate comprehensive approaches addressing data classification hierarchies, access control matrices, regulatory compliance verification across multiple jurisdictions, audit trail maintenance with forensic capabilities, and performance monitoring with ethical oversight. [8] Claude Pro offers enhanced privacy protections with explicit commitments that project data will not train models without user consent, whereas ChatGPT’s enterprise plans provide similar protections requiring careful configuration management.

Critical Recommendation

Keep custom AI assistants read-only for now—do not grant direct write access to production databases (via connectors or Zapier). Given ongoing hallucination and instruction-following variability, route any data changes through a human-in-the-loop step (AI drafts → human approves → system writes). The trade-off is less end-to-end automation today, but you still gain time on repetitive tasks while protecting data integrity and compliance.
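The “AI drafts → human approves → system writes” route can be enforced in code rather than by convention. The following is a minimal sketch using an in-memory store as a stand-in for a production system. The class and method names are hypothetical, and a real deployment would also need authentication and durable audit storage.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProposedChange:
    """A change drafted by the assistant; nothing is written until a human approves."""
    record_id: str
    new_values: dict
    approved_by: Optional[str] = None


@dataclass
class GatedStore:
    """In-memory stand-in for a production database (illustrative only)."""
    records: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def approve(self, change: ProposedChange, reviewer: str) -> None:
        """Record the human reviewer who signed off on the change."""
        change.approved_by = reviewer

    def execute(self, change: ProposedChange) -> None:
        """Apply the change only if it carries a human approval."""
        if change.approved_by is None:
            raise PermissionError("change has not been approved by a human")
        self.records.setdefault(change.record_id, {}).update(change.new_values)
        self.audit_log.append((change.record_id, change.approved_by))
```

The point of the design is that the assistant can only produce `ProposedChange` objects; the write path itself refuses anything without a named approver.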

Risk Synthesis

The immediate danger is not malevolent super-intelligence but confident systems making human-scale mistakes at machine speed. A single misreading, such as “archive old files” interpreted as “remove everything created before today,” or a hallucinated field value that overwrites a customer record, can cascade into operational failure. More insidious is prompt injection: invisible instructions embedded in websites or documents that persuade an agent to ignore prior controls and act with your permissions, effectively turning an external page into an insider threat.

Add the twin risks of sensitive-information disclosure and data poisoning, and the case for cautious, gated automation becomes overwhelming. In practice, the only sustainable posture today is skeptical permissioning and explicit human oversight: default-deny scopes, read-only by default, retrieval-with-citations, and the “prepare → human approve → execute” control loop with auditability.
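A default-deny posture means an action is permitted only when its scope has been granted explicitly. This is a minimal sketch of that check; the scope names are hypothetical and do not correspond to any particular platform’s permission model.

```python
# Default-deny permission check: an action is allowed only if its scope was
# explicitly granted. Scope names below are hypothetical examples.
READ_ONLY_SCOPES = frozenset({"crm:read", "docs:read"})


def is_allowed(action_scope: str, granted: frozenset = READ_ONLY_SCOPES) -> bool:
    """Return True only for explicitly granted scopes; everything else is denied."""
    return action_scope in granted
```

Because unknown or misspelled scopes fall through to denial, an instruction injected by an external page cannot unlock write access simply by naming a new capability.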

Question 5: How Do We Design Knowledge Ecosystems That Encourage Innovation Rather Than Pattern-Locked Conformity?

Knowledge base optimization requires balancing comprehensiveness with diversity, much like maintaining biodiversity in natural systems and preventing invasive species dominance that reduces ecosystem resilience. AI systems demonstrate inherent pattern-reproduction tendencies: providing extensive examples creates over-reliance on those specific samples, resulting in repetitive outputs that lack analytical variability and creative problem-solving capacity.

The optimal approach emphasizes quality over quantity, utilizing carefully curated, high-relevance documents rather than comprehensive information dumps that may overwhelm system capabilities. Prefer guidelines over narrow examples: use principle-led instructions and varied, non-repetitive samples. Overloading the system with highly specific examples teaches pattern mimicry, risks template-locked outputs, and can contaminate long-lived knowledge bases. Keep permanent knowledge principle-driven; keep example-heavy prompts ephemeral.

Strategic design principles: structure matters. [2] Well-organized, clearly labeled, hierarchical documents consistently outperform unstructured dumps. Currency management keeps content relevant and prevents propagation of outdated guidance, similar to how healthy ecosystems cycle nutrients while eliminating toxins that impede growth. Access control alignment must reflect organizational data governance and security policies, creating sustainable information flows that support decision-making without compromising operational security or competitive intelligence.

Testing methodologies prove essential for ensuring output variability and preventing the technological equivalent of monoculture thinking that reduces organizational adaptability. The pattern-based nature of AI systems means they will consistently reference provided examples, potentially creating monotonous outputs if knowledge bases lack sufficient diversity. General instructions typically outperform specific directives, except in cases involving strong, standardized processes where derivative approaches prove beneficial for regulatory compliance or quality assurance applications.

Question 6: What Are the Real Economic Implications and How Do We Build Sustainable Investment Models?

Total cost of ownership analysis reveals complexity far beyond the $20 monthly subscription fee, requiring long-term economic thinking similar to sustainable infrastructure investment that considers lifecycle costs, maintenance requirements, and adaptation capacity. Enterprise deployments require substantial initial investment in clear corporate AI rules of use (data classification: what can and cannot be placed into AI requests), implementation, comprehensive training programs, an AI-infused culture, and system integration that ensures AI capabilities complement rather than disrupt existing workflows and human expertise networks.

The economic model mirrors sustainable development principles where initial investment costs are balanced by long-term productivity gains, resource optimization, and enhanced competitive positioning. Professional services typically represent significant multiples of software licensing costs, covering implementation expertise, customization requirements, and organizational training programs that ensure effective human-AI collaboration. Infrastructure expenses encompass cloud computing resources, integration development, and ongoing maintenance requirements that support continuous improvement and adaptation to evolving business needs.

Return on investment manifests through multiple value streams that compound over time, creating positive feedback loops characteristic of healthy economic ecosystems. Direct cost reduction through automation of routine processes frees human resources for strategic initiatives requiring creativity, relationship management, and ethical reasoning. Resource efficiency improvements reduce operational waste and enhance output quality and consistency. Error reduction in routine operations improves customer satisfaction and operational reliability, creating reputational value that translates into competitive advantage and market positioning strength.

Operational requirement: maintain human control of AI outputs (HITL approval) as a mandatory gate until measured hallucination/error rates fall below 0.1% on a fixed, representative internal evaluation set for at least three consecutive review cycles.
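The gate described above can be expressed as a single check over the measured error rate of each review cycle. A minimal sketch, with a hypothetical function name; it assumes rates are recorded as fractions (0.1% = 0.001) in chronological order and that “below” means strictly below the threshold.

```python
def hitl_gate_can_be_relaxed(
    error_rates: list,
    threshold: float = 0.001,  # 0.1% expressed as a fraction
    cycles: int = 3,
) -> bool:
    """True only if the most recent `cycles` review cycles are all below the threshold.

    error_rates holds one measured hallucination/error rate per review cycle,
    oldest first, each measured on the same fixed evaluation set.
    """
    if cycles < 1:
        raise ValueError("cycles must be at least 1")
    if len(error_rates) < cycles:
        return False  # not enough history yet: keep the human gate in place
    return all(rate < threshold for rate in error_rates[-cycles:])
```

A single cycle at or above the threshold resets the count, since the three qualifying cycles must be consecutive and the most recent ones.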

Question 7: How Do We Address the ‘Half-Brain’ Problem and Build Resilient, Truth-Seeking AI Systems?

[4] The ‘half-brain’ phenomenon represents perhaps the most significant challenge in enterprise AI deployment, requiring honest acknowledgment of technological limitations while maximizing available capabilities for legitimate business applications. Current AI systems operate with inherent knowledge gaps, trained on publicly available data while restricted from accessing sensitive topics including corruption analysis, aggressive tax optimization strategies, and controversial business practices. This limitation creates analytical blind spots that can fundamentally compromise strategic decision-making processes requiring comprehensive situational awareness and competitive intelligence gathering.

Hallucination risks constitute the primary reliability concern, where AI systems generate convincing but factually incorrect information with apparent authority and confidence. This phenomenon reflects the broader challenge of maintaining truth and accuracy in complex information ecosystems where validation mechanisms may be inadequate or compromised.

“The non-deterministic nature of LLMs means they can produce different answers to identical questions depending on server loads and token processing variations. This fundamental characteristic makes traditional performance metrics challenging to establish and requires new approaches to quality assurance and reliability assessment.”

Mitigation strategies require approaches as sophisticated as environmental monitoring systems that detect and respond to threats while maintaining ecosystem health. Real-time fact-checking against authoritative sources provides immediate verification capabilities. Confidence and uncertainty scoring for AI-generated responses should enable human-in-the-loop intervention at predefined risk/uncertainty thresholds, with higher-impact actions requiring stricter review.
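Threshold-based escalation can be sketched as a lookup from impact tier to the minimum confidence required to skip review. The tier names and threshold values below are illustrative assumptions, and the confidence score is assumed to come from a separate, hypothetical scoring step, since language models do not reliably report their own uncertainty.

```python
# Minimum confidence required to skip human review, per impact tier.
# Values are illustrative assumptions; a threshold above 1.0 means
# "always review", because confidence scores never exceed 1.0.
REVIEW_THRESHOLDS = {"low": 0.70, "medium": 0.85, "high": 1.01}


def needs_human_review(confidence: float, impact: str) -> bool:
    """Route an output to a human when confidence is too low for its impact tier."""
    if impact not in REVIEW_THRESHOLDS:
        return True  # unknown impact tiers default to review
    return confidence < REVIEW_THRESHOLDS[impact]
```

Higher-impact tiers carry stricter thresholds, and the top tier is reviewed regardless of score, which matches the requirement that high-impact actions receive stricter review.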

Professional skepticism becomes essential, with organizations requiring complete output verification for:

- Critical business decisions that affect strategic direction, regulatory compliance, or competitive positioning

- Any output likely to affect reputation (customer handling and support messages, external communications and press releases, pricing or policy-change notices, HR or legal correspondence, incident/ops status updates, social media and website copy, and investor/partner communications)

- Any output that will be reused downstream in AI chains or normal business processes (feeding RAG indexes, updating CRM/ERP records, triggering ticketing/workflows, or syncing data warehouses)

For public-facing items, require dual approval (business owner + compliance/PR) with an auditable record of review; for downstream uses, require a staging environment, data lineage/checksums, and defined rollback paths.

Question 8: What Happens When Technology Vendors Evolve Their Platforms or Market Dynamics Shift Fundamentally?

Platform dependency represents a strategic vulnerability requiring comprehensive risk management planning, similar to avoiding over-dependence on single suppliers in sustainable business ecosystems that value resilience and adaptability. Recent model deprecation events demonstrate this challenge: vendors regularly update, modify, or discontinue model versions, creating potential workflow disruption and performance changes that may fundamentally alter system capabilities without advance warning or migration support.

Platform Resilience Strategy

Being able to shift between vendors and models is key. Design for portability and resilience: use abstraction layers (adapter pattern) to decouple business logic from model APIs; keep prompts, tools, and RAG configurations source-controlled and vendor-neutral; maintain a cross-model evaluation suite; and keep a standing exit plan (dual-sourcing, shadow deployments, fast rollbacks) to absorb deprecations, pricing shifts, or policy changes.
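The adapter pattern mentioned above can be illustrated with a small vendor-neutral interface and an ordered fallback. This is a minimal sketch: `ChatModel`, `EchoModel`, and `FailingModel` are hypothetical stand-ins, and a real adapter would wrap the corresponding vendor SDK call inside `complete`.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Vendor-neutral interface that business logic depends on."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would call a vendor API here."""
    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class FailingModel:
    """Simulates a deprecated or unavailable model."""
    def complete(self, prompt: str) -> str:
        raise RuntimeError("model deprecated")


def complete_with_fallback(models: list, prompt: str) -> str:
    """Try each adapter in order and return the first successful reply."""
    last_error = None
    for model in models:
        try:
            return model.complete(prompt)
        except Exception as exc:  # broad on purpose: any vendor failure triggers fallback
            last_error = exc
    raise RuntimeError("all models failed") from last_error
```

Because callers only see `complete`, swapping or reordering vendors becomes a configuration change rather than a rewrite of business logic.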

The alternative platform ecosystem includes multiple options for organizations seeking to reduce vendor dependency risks. Poe.ai enables custom bot creation but presents data security questions when sharing resources with other users. [9] Perplexity AI offers “Spaces” functionality for custom AI assistants with some template support, though context limitations may restrict complex applications. OpenRouter provides aggregated API access to multiple models through pay-per-token pricing, though this requires technical expertise for implementation and management.

[1] Strategic considerations for technology evolution favor organizations implementing comprehensive AI capabilities while maintaining adaptability and vendor diversification. The shift from experimental to operational AI funding demonstrates market maturation favoring strategic, sustainable deployment over continued experimentation. However, access to specialized AI assistants built by an external provider represents an alternative strategy: rather than developing internal capabilities, organizations can leverage pre-built, expert-designed solutions that work immediately without extensive prompt engineering or customization effort.

Building Antifragile AI-Human Ecosystems: A Strategic Implementation Framework

Set AI risk tolerance and objectives; codify AI rules of use (Red/Amber/Green data classification—what can and cannot go into prompts or training); default read-only access (no direct writes to production); human-in-the-loop approval for all actions; require output control until measured hallucination/error rates fall below 0.1% on a fixed internal evaluation set for three consecutive cycles; define roles and governance (executive sponsor, product owner, data owner, security, legal, and employee representatives/works council); prepare communications and training (prompt hygiene, privacy/IP, bias & safety); establish telemetry and evaluation datasets; complete DPIA/security review and vendor assessment; and seed an AI-infused culture (opt-in champions to ChatGPT Enterprise and Claude Enterprise plans; safe sandboxes; office hours; a community of practice; and a recognition program) with clear incentives and feedback loops.

Optional organizational track: stand up a cross-functional “strike team” that operates in parallel to the infusion and traditional teams. Include department and function leads with decision rights. Mandate: unblock pilots, standardize guardrails, resolve policy exceptions, and accelerate go/no-go decisions. Operating model: 2-week sprints, daily 15-minute stand-ups, shared dashboard (cost, quality, risk), and an executive sponsor for rapid escalations. KPIs: time-to-decision, acceptance rate, correction/override rate, and hallucination/error rate (<0.1% target). Deliverables in the first 30 days: pilot inventory and prioritization, RAG data policy, HITL checklist, and a lightweight rollout/communications pack.

Develop internal expertise; identify high-impact, low-complexity use cases; establish governance frameworks; run ultra-low-cost pilots (≤2 weeks, ≤$1k or equivalent credits) with clear success metrics; choose go/no-go for each pilot; capture learnings and risks.

Scale go decisions; harden guardrails and HITL workflows; implement focused training; iterate on prompts/RAG and workflows; expand telemetry and cost/performance dashboards; choose go/no-go for each retained project.

Enterprise-wide deployment for validated use cases; define SLOs and quality gates; integrate with production via read-only + approval flows; ensure vendor portability (adapters, dual-sourcing); realize competitive advantage; run go/no-go reviews for each implemented project (invest more, invest less, or divest).

Continuous improvement; re-test on a fixed evaluation set each release; monitor drift and hallucination/error rate (<0.1% target); quarterly model/vendor reviews; maintain kill-switches and rollback rehearsals; technology evolution adaptation.

The Strategic Imperative

Custom AI assistants represent transformational infrastructure requiring implementation strategies that balance technological capability with human wisdom, economic efficiency with social responsibility, environmental sustainability with innovation velocity, and competitive advantage with ethical stewardship. Successful deployment demands comprehensive understanding of platform capabilities, rigorous security frameworks, systematic change management, and realistic expectations regarding AI constraints while optimizing human potential and preserving organizational values.

The ecological parallel proves instructive: like thriving natural ecosystems, successful AI implementations require diversity, resilience, symbiotic relationships between technological and human elements, and adaptive capacity that enables evolution without compromising core stability. The economic imperative grows increasingly compelling, with early adopters positioned to achieve measurable advantages while demonstrating responsible stewardship of both technological and human resources. The humanistic element remains paramount—technology serves humanity most effectively when designed with empathy, oversight, and deep respect for both capabilities and limitations.

[1] McKinsey research reveals the persistent gap between AI investment and realization: despite widespread investment plans, only 1% of companies consider themselves mature in deployment. This chasm represents both challenge and opportunity for strategic leaders willing to implement comprehensive frameworks that prioritize human-AI collaboration over mere technological adoption. The organizations achieving sustainable competitive advantage combine technological sophistication with human wisdom, treating AI as powerful augmentation rather than replacement for human expertise, creativity, and moral reasoning capabilities.

Implementation success depends upon organizational readiness, strategic vision, and unwavering commitment to comprehensive change management that honors both technological potential and human dignity. The most effective deployments begin with clear objectives, realistic timelines, robust governance frameworks ensuring ethical AI deployment, and systematic approaches to capability building that strengthen rather than diminish human potential. As these systems evolve, maintaining focus on human augmentation rather than replacement ensures sustainable value creation and preserves the essential human elements—creativity, empathy, ethical reasoning, and relationship management—that drive organizational innovation and social responsibility in an increasingly connected world.

References
